Artificial intelligence has moved beyond experimentation: it now powers search engines, recommender systems, financial models, and autonomous vehicles. Yet one of the biggest hurdles between a promising prototype and production impact is deploying models safely and reliably. Recent research notes that while 78 percent of organizations have adopted AI, only about 1 percent have achieved full maturity. That maturity requires scalable infrastructure, sub‑second response times, monitoring, and the ability to roll back models when things go wrong. With the landscape evolving rapidly, this article offers a use‑case‑driven guide to selecting the right deployment strategy for your AI models. It draws on industry expertise, research papers, and trending conversations across the web while highlighting where Clarifai’s products naturally fit.

Quick Digest: What are the best AI deployment strategies today?

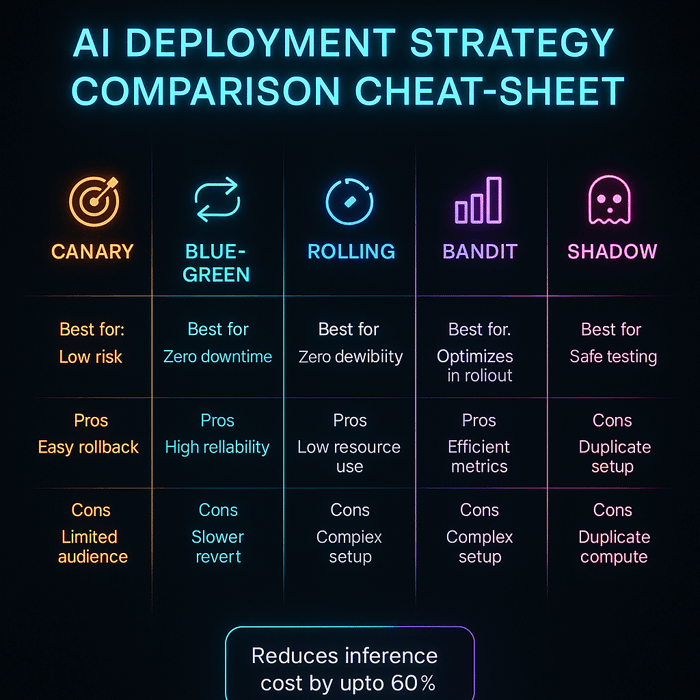

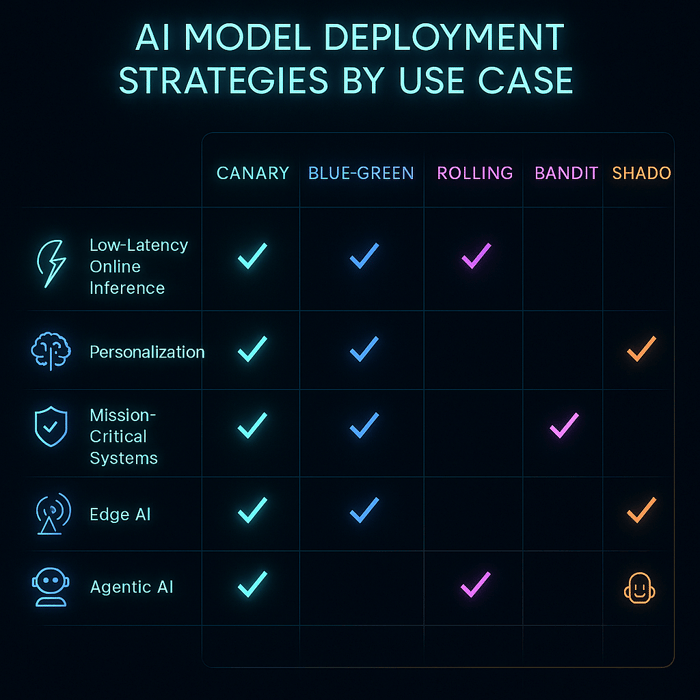

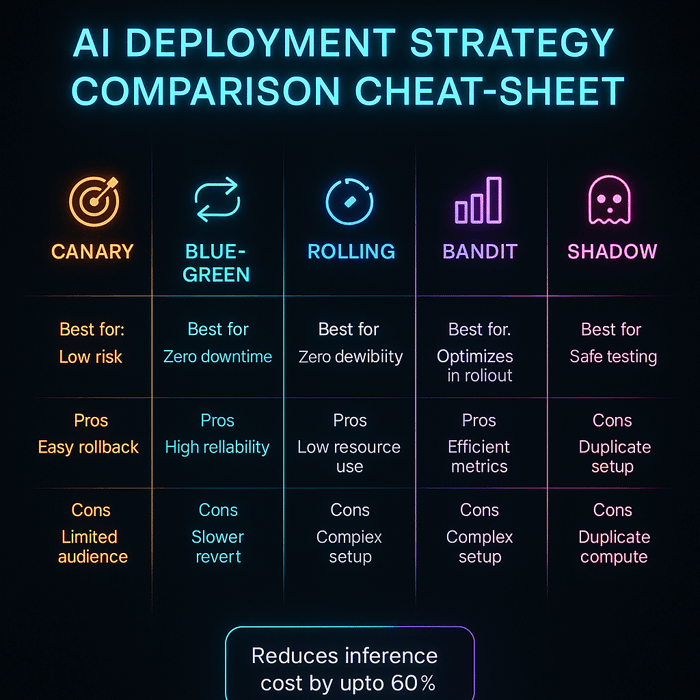

If you want the short answer: There is no single best strategy. Deployment techniques such as shadow testing, canary releases, blue‑green rollouts, rolling updates, multi‑armed bandits, serverless inference, federated learning, and agentic AI orchestration all have their place. The right approach depends on the use case, the risk tolerance, and the need for compliance. For example:

- Real‑time, low‑latency services (search, ads, chat) benefit from shadow deployments followed by canary releases to validate models on live traffic before full cutover.

- Rapid experimentation (personalization, multi‑model routing) may require multi‑armed bandits that dynamically allocate traffic to the best model.

- Mission‑critical systems (payments, healthcare, finance) often adopt blue‑green deployments for instant rollback.

- Edge and privacy‑sensitive applications leverage federated learning and on‑device inference.

- Emerging architectures like serverless inference and agentic AI introduce new possibilities but also new risks.

We’ll unpack each scenario in detail, provide actionable guidance, and share expert insights in every section.

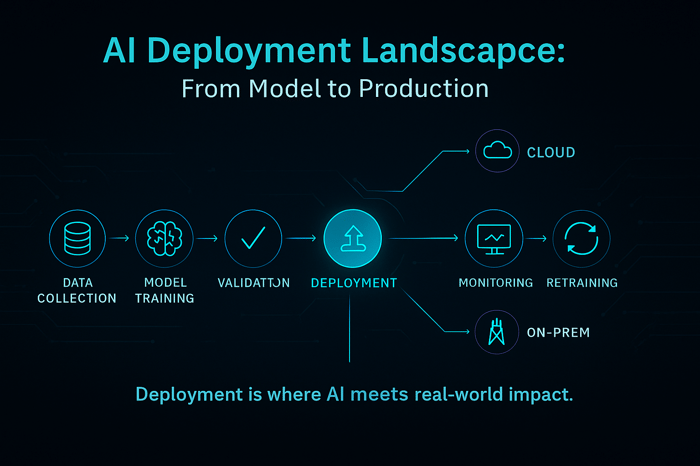

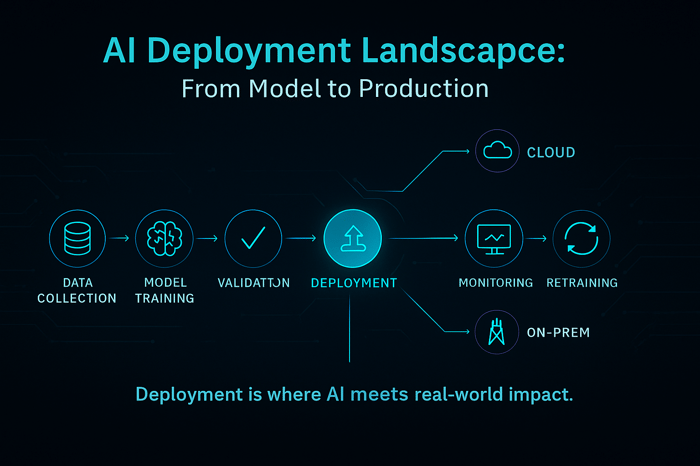

Why model deployment is hard (and why it matters)

Moving from a model on a laptop to a production service is challenging for three reasons:

- Performance constraints – Production systems must maintain low latency and high throughput. For a recommender system, even a few milliseconds of additional latency can reduce click‑through rates, and slow responses quickly erode user trust.

- Reliability and rollback – A new model version may perform well in staging but fail when exposed to unpredictable real‑world traffic. An instant rollback mechanism is vital to limit the damage when things go wrong.

- Compliance and trust – In regulated industries like healthcare or finance, models must be auditable, fair, and safe. They must meet privacy requirements and track how decisions are made.

Clarifai’s perspective: As a leader in AI, Clarifai sees these challenges daily. The Clarifai platform offers compute orchestration to manage models across GPU clusters, on‑prem and cloud inference options, and local runners for edge deployments. These capabilities ensure models run where they are needed most, with robust observability and rollback features built in.

Expert insights

- Peter Norvig, noted AI researcher, reminds teams that “machine learning success is not just about algorithms, but about integration: infrastructure, data pipelines, and monitoring must all work together.” Companies that treat deployment as an afterthought often struggle to deliver value.

- Genevieve Bell, anthropologist and technologist, emphasizes that trust in AI is earned through transparency and accountability. Deployment strategies that support auditing and human oversight are essential for high‑impact applications.

How does shadow testing enable safe rollouts?

Shadow testing (sometimes called silent deployment or dark launch) is a technique where the new model receives a copy of live traffic but its outputs are not shown to users. The system logs predictions and compares them to the current model’s outputs to measure differences and potential improvements. Shadow testing is ideal when you want to evaluate model performance in real conditions without risking user experience.

Why it matters

Many teams deploy models after only offline metrics or synthetic tests. Shadow testing reveals real‑world behavior: unexpected latency spikes, distribution shifts, or failures. It allows you to collect production data, detect bias, and calibrate risk thresholds before serving the model. You can run shadow tests for a fixed period (e.g., 48 hours) and analyze metrics across different user segments.
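The mirroring idea can be sketched in a few lines. This is a minimal illustration, not Clarifai’s API: `ShadowRouter`, `current_model`, and `candidate_model` are hypothetical names, models are assumed to be plain callables that return a numeric score, and a production system would usually run the shadow call asynchronously so it cannot add latency.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class ShadowRouter:
    """Serve the current model; run the candidate silently on the same input."""
    primary: Callable[[dict], float]
    shadow: Callable[[dict], float]
    log: List[dict] = field(default_factory=list)

    def predict(self, request: dict) -> float:
        served = self.primary(request)  # only this value reaches the user
        try:
            shadowed = self.shadow(request)
            self.log.append({"request": request, "primary": served,
                             "shadow": shadowed, "error": None})
        except Exception as exc:  # a shadow failure must never affect serving
            self.log.append({"request": request, "primary": served,
                             "shadow": None, "error": repr(exc)})
        return served

    def disagreement_rate(self, tolerance: float = 0.1) -> float:
        """Share of logged requests where the two models differ by more than tolerance."""
        scored = [r for r in self.log if r["error"] is None]
        if not scored:
            return 0.0
        differing = sum(abs(r["primary"] - r["shadow"]) > tolerance for r in scored)
        return differing / len(scored)


# Hypothetical stand-ins for the deployed and candidate models.
def current_model(req: dict) -> float:
    return 0.2 * req["x"]


def candidate_model(req: dict) -> float:
    return 0.2 * req["x"] + (0.5 if req["x"] > 3 else 0.0)


router = ShadowRouter(primary=current_model, shadow=candidate_model)
served = [router.predict({"x": x}) for x in range(5)]
print(router.disagreement_rate())
```

The log, not the response, is the product of a shadow test: it is what you later slice by segment or time of day.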

Expert insights

- Use multiple metrics – Evaluate model outputs not just by accuracy but by business KPIs, fairness metrics, and latency. Hidden bugs may show up in specific segments or times of day.

- Limit side effects – Ensure the new model does not trigger state changes (e.g., sending emails or writing to databases). Use read‑only calls or sandboxed environments.

- Clarifai tip – The Clarifai platform can mirror production requests to a new model instance on compute clusters or local runners. This simplifies shadow testing and log collection without service impact.

Creative example

Imagine you are deploying a new computer‑vision model to detect product defects on a manufacturing line. You set up a shadow pipeline: every image captured goes to both the current model and the new one. The new model’s predictions are logged, but the system still uses the existing model to control machinery. After a week, you find that the new model catches defects earlier but occasionally misclassifies rare patterns. You adjust the threshold and only then plan to roll out.

How to run canary releases for low‑latency services

After shadow testing, the next step for real‑time applications is often a canary release. This approach sends a small portion of traffic – such as 1 percent – to the new model while the majority continues to use the stable version. If metrics remain within predefined bounds (latency, error rate, conversion, fairness), traffic gradually ramps up.

Important details

- Stepwise ramp‑up – Start with 1 percent of traffic and monitor metrics. If successful, increase to 5 percent, then 20 percent, and continue until full rollout. Each step should pass gating criteria before proceeding.

- Automatic rollback – Define thresholds that trigger rollback if things go wrong (e.g., latency rises by more than 10 percent, or conversion drops by more than 1 percent). Rollbacks should be automated to minimize downtime.

- Cell‑based rollouts – For global services, deploy per region or availability zone to limit the blast radius. Monitor region‑specific metrics; what works in one region may not in another.

- Model versioning & feature flags – Use feature flags or configuration variables to switch between model versions seamlessly without code deployment.
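The ramp‑up and rollback rules above can be expressed as a small gating function. The sketch below is illustrative only: the 1, 5, and 20 percent steps and the 10 percent and 1 percent thresholds come from the text, while the 50 percent step, the choice of p95 latency, the relative (rather than absolute) interpretation of the thresholds, and all names are assumptions.

```python
from dataclasses import dataclass

# Percent of traffic sent to the canary at each stage (50 is an assumed extra step).
RAMP_STEPS = [1, 5, 20, 50, 100]


@dataclass
class Metrics:
    p95_latency_ms: float
    conversion_rate: float


def passes_gate(baseline: Metrics, canary: Metrics,
                max_latency_increase: float = 0.10,
                max_conversion_drop: float = 0.01) -> bool:
    """True if the canary stays within the allowed relative regression."""
    latency_ok = canary.p95_latency_ms <= baseline.p95_latency_ms * (1 + max_latency_increase)
    conversion_ok = canary.conversion_rate >= baseline.conversion_rate * (1 - max_conversion_drop)
    return latency_ok and conversion_ok


def next_traffic_percent(current: int, baseline: Metrics, canary: Metrics) -> int:
    """Return the next canary share, or 0 to signal an automatic rollback."""
    if not passes_gate(baseline, canary):
        return 0
    later_steps = [step for step in RAMP_STEPS if step > current]
    return later_steps[0] if later_steps else 100


baseline = Metrics(p95_latency_ms=100.0, conversion_rate=0.05)
healthy = Metrics(p95_latency_ms=105.0, conversion_rate=0.0499)
slow = Metrics(p95_latency_ms=120.0, conversion_rate=0.05)

print(next_traffic_percent(1, baseline, healthy))  # advances to the next step
print(next_traffic_percent(5, baseline, slow))     # 0 means roll back
```

In practice the returned percentage would be written to a feature flag or load‑balancer weight, so promotion and rollback need no code deployment.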

Expert insights

- Multi‑metric gating – Data scientists and product owners should agree on multiple metrics for promotion, including business outcomes (click‑through rate, revenue) and technical metrics (latency, error rate). Solely looking at model accuracy can be misleading.

- Continuous monitoring – Canary tests are not just for the rollout. Continue to monitor after full deployment because model performance can drift.

- Clarifai tip – Clarifai provides a model management API with version tracking and metrics logging. Teams can configure canary releases through Clarifai’s compute orchestration and auto‑scale across GPU clusters or CPU containers.

Creative example

Consider a customer support chatbot that answers product questions. A new dialogue model promises better responses but might hallucinate. You release it as a canary to 2 percent of users with guardrails: if the model cannot answer confidently, it transfers to a human. Over a week, you track average customer satisfaction and chat duration. When satisfaction improves and hallucinations remain rare, you ramp up traffic gradually.

Multi‑armed bandits for rapid experimentation

In contexts where you are comparing multiple models or strategies and want to optimize during rollout, multi‑armed bandits can outperform static A/B tests. Bandit algorithms dynamically allocate more traffic to better performers and reduce exploration as they gain confidence.

Where bandits shine

- Personalization & ranking – When you have many candidate ranking models or recommendation algorithms, bandits reduce regret by prioritizing winners.

- Prompt engineering for LLMs – Trying different prompts for a generative AI model (e.g., summarization styles) can benefit from bandits that allocate more traffic to prompts yielding higher user ratings.

- Pricing strategies – In dynamic pricing, bandits can test and adapt price tiers to maximize revenue without over‑discounting.

Bandits vs. A/B tests

A/B tests allocate fixed percentages of traffic to each variant until statistically significant results emerge. Bandits, by contrast, adapt over time. They balance exploration and exploitation, making sure every option is tried while focusing traffic on those that perform well. This typically yields a higher cumulative reward, but the statistical analysis is more complex.

Expert insights

- Algorithm choice matters – Different bandit algorithms (e.g., epsilon‑greedy, Thompson sampling, UCB) have different trade‑offs. For example, Thompson sampling often converges quickly with low regret.

- Guardrails are essential – Even with bandits, maintain minimum traffic floors for each variant to avoid prematurely discarding a potentially better model. Keep a holdout slice for offline evaluation.

- Clarifai tip – Clarifai can integrate with reinforcement learning libraries. By orchestrating multiple model versions and collecting reward signals (e.g., user ratings), Clarifai helps implement bandit rollouts across different endpoints.

Creative example

Suppose your e‑commerce platform uses an AI model to recommend products. You have three candidate models: Model A, B, and C. Instead of splitting traffic evenly, you employ a Thompson sampling bandit. Initially, traffic is split roughly equally. After a day, Model B shows higher click‑through rates, so it receives more traffic while Models A and C receive less but are still explored. Over time, Model B is clearly the winner, and the bandit automatically shifts most traffic to it.
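A simulation of this scenario might look like the following sketch. It uses Beta‑Bernoulli Thompson sampling with Python’s standard `random` module; the click‑through rates are invented for illustration, and the class and variable names are hypothetical. It omits the minimum traffic floors and holdout slice recommended above.

```python
import random


class ThompsonSampler:
    """Beta-Bernoulli Thompson sampling over a fixed set of arms (models)."""

    def __init__(self, arms, seed=None):
        self.rng = random.Random(seed)
        self.successes = {arm: 0 for arm in arms}
        self.failures = {arm: 0 for arm in arms}

    def choose(self):
        # Draw a plausible click-through rate for each arm from its Beta posterior
        # (uniform Beta(1, 1) prior) and route the request to the highest draw.
        draws = {
            arm: self.rng.betavariate(self.successes[arm] + 1, self.failures[arm] + 1)
            for arm in self.successes
        }
        return max(draws, key=draws.get)

    def update(self, arm, clicked):
        if clicked:
            self.successes[arm] += 1
        else:
            self.failures[arm] += 1


# Invented click-through rates, unknown to the bandit.
true_ctr = {"A": 0.04, "B": 0.08, "C": 0.05}
sampler = ThompsonSampler(true_ctr, seed=0)
environment = random.Random(1)  # simulates user clicks

pulls = {arm: 0 for arm in true_ctr}
for _ in range(20000):
    arm = sampler.choose()
    pulls[arm] += 1
    sampler.update(arm, environment.random() < true_ctr[arm])

print(pulls)  # most traffic should end up on the arm with the highest true rate
```

Early on the posteriors overlap, so traffic is spread across all three models; as evidence accumulates, draws for the weaker models rarely win and their share shrinks without ever being forced to zero.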

Blue‑green deployments for mission‑critical systems

When downtime is unacceptable (for example, in payment gateways, healthcare diagnostics, and online banking), the blue‑green strategy is often preferred. In this approach, you maintain two environments: Blue (current production) and Green (the new version). Traffic can be switched instantly from blue to green and back.

How it works

- Parallel environments – The new model is deployed in the green environment while the blue environment continues to serve all traffic.

- Testing – You run integration tests, synthetic traffic, and possibly a limited shadow test in the green environment. You compare metrics with the blue environment to ensure parity or improvement.

- Cutover – Once you are confident, you flip traffic from blue to green. Should problems arise, you can flip back instantly.

- Cleanup – After the green environment proves stable, you can decommission the blue environment or repurpose it for the next version.
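The cutover and rollback steps reduce to swapping a single pointer. The sketch below models that in memory; `BlueGreenRouter` and the probe format are hypothetical, and in a real system the pointer would be a load‑balancer rule or feature flag rather than a Python attribute.

```python
class BlueGreenRouter:
    """Routes all traffic to one of two environments and can swap them atomically."""

    def __init__(self, blue, green):
        self.environments = {"blue": blue, "green": green}
        self.live = "blue"

    def _idle(self):
        return "green" if self.live == "blue" else "blue"

    def handle(self, request):
        return self.environments[self.live](request)

    def smoke_test(self, probes):
        """Run (request, check) probes against the idle environment."""
        model = self.environments[self._idle()]
        return all(check(model(request)) for request, check in probes)

    def cut_over(self, probes):
        """Switch traffic to the idle environment only if every probe passes."""
        if not self.smoke_test(probes):
            return False
        self.live = self._idle()
        return True

    def roll_back(self):
        """Point traffic back at the previous environment, which was left intact."""
        self.live = self._idle()


# Hypothetical model versions standing in for two full environments.
router = BlueGreenRouter(
    blue=lambda request: {"version": "v1", "score": 0.5},
    green=lambda request: {"version": "v2", "score": 0.7},
)
probes = [({"amount": 10}, lambda out: 0.0 <= out["score"] <= 1.0)]

before = router.handle({"amount": 10})["version"]
switched = router.cut_over(probes)
after = router.handle({"amount": 10})["version"]
router.roll_back()
restored = router.handle({"amount": 10})["version"]
print(before, switched, after, restored)
```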

Pros:

- Zero downtime during the cutover; users see no interruption.

- Instant rollback ability; you simply redirect traffic back to the previous environment.

- Reduced risk when combined with shadow or canary testing in the green environment.

Cons:

- Higher infrastructure cost, as you must run two full environments (compute, storage, pipelines) concurrently.

- Complexity in synchronizing data across environments, especially with stateful applications.

Expert insights

- Plan for data synchronization – For databases or stateful systems, decide how to replicate writes between blue and green environments. Options include dual writes or read‑only periods.

- Use configuration flags – Avoid code changes to flip environments. Use feature flags or load balancer rules for atomic switchover.

- Clarifai tip – On Clarifai, you can spin up an isolated deployment zone for the new model and then switch the routing. This reduces manual coordination and ensures that the old environment stays intact for rollback.

Meeting compliance in regulated & high‑risk domains

Industries like healthcare, finance, and insurance face stringent regulatory requirements. They must ensure models are fair, explainable, and auditable. Deployment strategies here often involve extended shadow or silent testing, human oversight, and careful gating.

Key considerations

- Silent deployments – Deploy the new model in a read‑only mode. Log predictions, compare them to the existing model, and run fairness checks across demographics before promoting.

- Audit logs & explainability – Maintain detailed records of training data, model version, hyperparameters, and environment. Use model cards to document intended uses and limitations.

- Human‑in‑the‑loop – For sensitive decisions (e.g., loan approvals, medical diagnoses), keep a human reviewer who can override or confirm the model’s output. Provide the reviewer with explanation features or LIME/SHAP outputs.

- Compliance review board – Establish an internal committee to sign off on model deployment. They should review performance, bias metrics, and legal implications.

Expert insights

- Bias detection – Use statistical tests and fairness metrics (e.g., demographic parity, equalized odds) to identify disparities across protected groups.

- Documentation – Prepare comprehensive documentation for auditors detailing how the model was trained, validated, and deployed. This not only satisfies regulations but also builds trust.

- Clarifai tip – Clarifai supports role‑based access control (RBAC), audit logging, and integration with fairness toolkits. You can store model artifacts and logs in the Clarifai platform to simplify compliance audits.

Creative example

Suppose a loan underwriting model is being updated. The team first deploys it silently and logs predictions for thousands of applications. They compare outcomes by gender and ethnicity to ensure the new model does not inadvertently disadvantage any group. A compliance officer reviews the results and only then approves a canary rollout. The underwriting system still requires a human credit officer to sign off on any decision, providing an extra layer of oversight.
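The group comparison in this example can be made concrete with a demographic parity check on the silent‑deployment log. The data, group labels, and the 0.10 review threshold below are invented for illustration; real audits would use larger samples, additional metrics such as equalized odds, and thresholds agreed with the compliance team.

```python
from collections import defaultdict


def approval_rates(decisions):
    """decisions: iterable of (group, approved) pairs taken from the shadow log."""
    totals, approved = defaultdict(int), defaultdict(int)
    for group, ok in decisions:
        totals[group] += 1
        approved[group] += int(ok)
    return {group: approved[group] / totals[group] for group in totals}


def demographic_parity_gap(decisions):
    """Largest difference in approval rate between any two groups."""
    rates = approval_rates(decisions)
    return max(rates.values()) - min(rates.values())


# Invented shadow-log sample: 60% approvals in one group, 45% in the other.
shadow_log = (
    [("group_a", True)] * 60 + [("group_a", False)] * 40
    + [("group_b", True)] * 45 + [("group_b", False)] * 55
)

gap = demographic_parity_gap(shadow_log)
needs_review = gap > 0.10  # hypothetical threshold set by the compliance board
print(round(gap, 2), needs_review)
```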

Rolling updates & champion‑challenger in drift‑heavy domains

Domains like fraud detection, content moderation, and finance see rapid changes in data distribution. Concept drift can degrade model performance quickly if not addressed. Rolling updates and champion‑challenger frameworks help handle continuous improvement.

How it works

- Rolling update – Gradually replace pods or replicas of the current model with the new version. For example, replace one replica at a time in a Kubernetes cluster. This avoids a big‑bang cutover and lets you monitor performance in production as the rollout proceeds.

- Champion‑challenger – Run the new model (challenger) alongside the current model (champion) for an extended period. Each model receives a portion of traffic, and metrics are logged. When the challenger consistently outperforms the champion across metrics, it becomes the new champion.

- Drift monitoring – Deploy tools that monitor feature distributions and prediction distributions. Trigger re‑training or fall back to a simpler model when drift is detected.
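One common way to monitor a feature or prediction distribution is the population stability index (PSI), which compares a reference sample with recent production values. The text does not prescribe a specific drift metric, so treat PSI as one option among several; the bin count, the probability floor, and the 0.25 alert threshold (a widely quoted rule of thumb) are assumptions.

```python
import math


def psi(expected, actual, bins=10):
    """Population stability index between a reference sample and a recent sample."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if the reference is constant

    def histogram(values):
        counts = [0] * bins
        for value in values:
            index = int((value - lo) / width)
            counts[min(max(index, 0), bins - 1)] += 1  # clamp out-of-range values
        # A small floor keeps empty bins from producing log(0).
        return [max(count / len(values), 1e-6) for count in counts]

    e, a = histogram(expected), histogram(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


reference = [i / 100 for i in range(100)]   # training-time feature values
shifted = [value + 0.5 for value in reference]  # production values after drift

DRIFT_THRESHOLD = 0.25  # assumed rule-of-thumb alert level
print(psi(reference, reference))
print(psi(reference, shifted) > DRIFT_THRESHOLD)
```

A scheduled job could compute this per feature and trigger re‑training, or a fallback to a simpler model, when the threshold is exceeded.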

Expert insights

- Keep an archive of historical models – You may need to revert to an older model if the new one fails or if drift is detected. Version everything.

- Automate re‑training – In drift‑heavy domains, you might need to re‑train models weekly or daily. Use pipelines that fetch fresh data, re‑train, evaluate, and deploy with minimal human intervention.

- Clarifai tip – Clarifai’s compute orchestration can schedule and manage continuous training jobs. You can monitor drift and automatically trigger new runs. The model registry stores versions and metrics for easy comparison.

Batch & offline scoring: when real‑time isn’t required

Not all models need millisecond responses. Many enterprises rely on batch or offline scoring for tasks like overnight risk scoring, recommendation embedding updates, and periodic forecasting. For these scenarios, deployment strategies focus on accuracy, throughput, and determinism rather than latency.

Common patterns

- Recreate strategy – Stop the old batch job, run the new job, validate results, and resume. Because batch jobs run offline, it is easier to roll back if issues occur.

- Blue‑green for pipelines – Use separate storage or data partitions for new outputs. After verifying the new job, switch downstream systems to read from the new partition. If an error is discovered, revert to the old partition.

- Checkpointing and snapshotting – Large batch jobs should periodically save intermediate states. This allows recovery if the job fails halfway and speeds up experimentation.
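The blue‑green pattern for pipelines can be sketched as partitioned outputs plus an "active" pointer that downstream readers follow. Everything here is hypothetical: `ScoreStore`, the partition names, and the thresholds are illustrative, and a real pipeline would keep partitions in object storage or a warehouse rather than in memory.

```python
def changed_fraction(old, new, tolerance=0.05):
    """Share of shared keys whose score moved by more than tolerance."""
    keys = old.keys() & new.keys()
    if not keys:
        return 1.0
    changed = sum(abs(old[key] - new[key]) > tolerance for key in keys)
    return changed / len(keys)


class ScoreStore:
    """Keeps each batch run in its own partition; readers follow the active pointer."""

    def __init__(self):
        self.partitions = {}
        self.active = None
        self.previous = None

    def write(self, name, scores):
        self.partitions[name] = dict(scores)

    def promote(self, name, max_changed=0.2):
        """Switch readers to the new partition unless too many scores changed."""
        if self.active is not None:
            drift = changed_fraction(self.partitions[self.active], self.partitions[name])
            if drift > max_changed:
                return False
        self.previous, self.active = self.active, name
        return True

    def revert(self):
        self.active, self.previous = self.previous, self.active

    def read(self, key):
        return self.partitions[self.active][key]


store = ScoreStore()
store.write("run_1", {"a": 0.1, "b": 0.2, "c": 0.3, "d": 0.4})
first = store.promote("run_1")

store.write("run_2", {"a": 0.1, "b": 0.2, "c": 0.3, "d": 0.9})
second = store.promote("run_2")   # 1 of 4 scores changed: above the 20% limit

store.write("run_3", {"a": 0.1, "b": 0.2, "c": 0.3, "d": 0.42})
third = store.promote("run_3")
print(first, second, third, store.active)
```

Because old partitions are never overwritten, reverting is just a pointer move.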

Expert insights

- Validate output differences – Compare the new job’s outputs with the old job. Even minor changes can impact downstream systems. Use statistical tests or thresholds to decide whether differences are acceptable.

- Optimize resource usage – Schedule batch jobs during low‑traffic periods to minimize cost and avoid competing with real‑time workloads.

- Clarifai tip – Clarifai offers batch processing capabilities via its platform. You can run large image or text processing jobs and get results stored in Clarifai for further downstream use. The platform also supports file versioning so you can keep track of different model outputs.

Edge AI & federated learning: privacy and latency

As billions of devices come online, Edge AI has become a crucial deployment scenario. Edge AI moves computation closer to the data source, reducing latency and bandwidth consumption and improving privacy. Rather than sending all data to the cloud, devices like sensors, smartphones, and autonomous vehicles perform inference locally.

Benefits of edge AI

- Real‑time processing – Edge devices can react instantly, which is critical for augmented reality, autonomous driving, and industrial control systems.

- Enhanced privacy – Sensitive data stays on device, reducing exposure to breaches and complying with regulations like GDPR.

- Offline capability – Edge devices continue functioning without network connectivity. For example, healthcare wearables can monitor vital signs in remote areas.

- Cost reduction – Less data transfer means lower cloud costs. In IoT, local processing reduces bandwidth requirements.

Federated learning (FL)

When training models across distributed devices or institutions, federated learning enables collaboration without moving raw data. Each participant trains locally on its own data and shares only model updates (gradients or weights). The central server aggregates these updates to form a global model.
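The aggregation step is often implemented as a weighted average of client weights, known as federated averaging (FedAvg). The text does not name a specific algorithm, so this is an assumed, simplified instance: weights are flat lists of floats, the client counts are invented, and secure aggregation and differential privacy are left out.

```python
def federated_average(updates):
    """Weighted average of client model weights.

    updates: list of (weights, num_examples) pairs, where weights is a list of
    floats of the same length for every client. Clients with more local
    examples contribute proportionally more to the global model.
    """
    total_examples = sum(num_examples for _, num_examples in updates)
    dimension = len(updates[0][0])
    return [
        sum(weights[i] * num_examples for weights, num_examples in updates) / total_examples
        for i in range(dimension)
    ]


# Two hypothetical participants report locally trained weights; raw data never leaves them.
client_updates = [
    ([1.0, 2.0], 100),   # smaller site
    ([3.0, 4.0], 300),   # larger site
]
global_weights = federated_average(client_updates)
print(global_weights)
```

Each training round repeats the same cycle: broadcast the global weights, train locally, and aggregate again.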

Benefits: Federated learning aligns with privacy‑enhancing technologies and reduces the risk of data breaches. It keeps data under the control of each organization or user and promotes accountability and auditability.

Challenges: FL can still leak information through model updates. Attackers may attempt membership inference or exploit distributed training vulnerabilities. Teams must implement secure aggregation, differential privacy, and robust communication protocols.

Expert insights

- Hardware acceleration – Edge inference often relies on specialized chips (e.g., GPUs, TPUs, or neural processing units). Investment in AI‑specific chips is growing to enable low‑power, high‑performance edge inference.

- FL governance – Ensure that participants agree on the training schedule, data schema, and privacy guarantees. Use cryptographic techniques to protect updates.

- Clarifai tip – Clarifai’s local runner allows models to run on devices at the edge. It can be combined with secure federated learning frameworks so that models are updated without exposing raw data. Clarifai orchestrates the training rounds and provides central aggregation.

Creative example

Imagine a hospital consortium training a model to predict sepsis. Due to privacy laws, patient data cannot leave the hospital. Each hospital runs training locally and shares only encrypted gradients. The central server aggregates these updates to improve the model. Over time, all hospitals benefit from a shared model without violating privacy.

Multi‑tenant SaaS and retrieval‑augmented generation (RAG)

Why multi‑tenant models need extra care

Software‑as‑a‑service platforms often host many customer workloads. Each tenant might require different models, data isolation, and release schedules. To avoid one customer’s model affecting another’s performance, platforms adopt cell‑based rollouts: isolating tenants into independent “cells” and rolling out updates cell by cell.

Retrieval‑augmented generation (RAG)

RAG is a hybrid architecture that combines language models with external knowledge retrieval to produce grounded answers. According to recent reports, the RAG market reached $1.85 billion in 2024 and is growing at a 49 percent CAGR. This surge reflects demand for models that can cite sources and reduce hallucination risks.

How RAG works: The pipeline comprises three components: a retriever that fetches relevant documents, a ranker that orders them, and a generator (LLM) that synthesizes the final answer using the retrieved documents. The retriever may use dense vectors (e.g., BERT embeddings), sparse methods (e.g., BM25), or hybrid approaches. The ranker is often a cross‑encoder that provides deeper relevance scoring. The generator uses the top documents to produce the answer.
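The three stages can be shown with a toy pipeline. This sketch deliberately swaps in simple stand‑ins: term overlap instead of BM25 or dense embeddings, Jaccard similarity instead of a cross‑encoder, and a template instead of an LLM call. The corpus and all function names are invented for illustration.

```python
import re

# Tiny illustrative corpus; a real system would query a vector or keyword index.
DOCS = {
    "doc1": "Canary releases send a small share of traffic to the new model.",
    "doc2": "Blue-green deployments keep two environments for instant rollback.",
    "doc3": "Federated learning shares model updates instead of raw data.",
}


def tokens(text):
    return set(re.findall(r"[a-z]+", text.lower()))


def retrieve(query, docs, k=2):
    """Stage 1: cheap recall. Keep up to k documents sharing terms with the query."""
    q = tokens(query)
    scored = [(len(q & tokens(text)), doc_id) for doc_id, text in docs.items()]
    return [doc_id for score, doc_id in sorted(scored, reverse=True)[:k] if score > 0]


def rank(query, doc_ids, docs):
    """Stage 2: finer relevance scoring over the small candidate set."""
    q = tokens(query)

    def jaccard(doc_id):
        d = tokens(docs[doc_id])
        return len(q & d) / len(q | d)

    return sorted(doc_ids, key=jaccard, reverse=True)


def generate(query, doc_ids, docs):
    """Stage 3: placeholder for the LLM call; returns the answer with its sources."""
    context = " ".join(docs[doc_id] for doc_id in doc_ids)
    return {"answer": f"Based on the retrieved context: {context}", "sources": doc_ids}


def answer(query, docs=DOCS):
    candidates = retrieve(query, docs)
    ranked = rank(query, candidates, docs)
    return generate(query, ranked[:1], docs)


result = answer("How does blue-green rollback work?")
print(result["sources"], result["answer"])
```

Returning the source IDs alongside the answer is what makes citation and provenance tracking possible, and keeping the stages as separate functions mirrors how each can be versioned and rolled out independently.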

Benefits: RAG systems can cite sources, comply with regulations, and avoid expensive fine‑tuning. They reduce hallucinations by grounding answers in real data. Enterprises use RAG to build chatbots that answer from corporate knowledge bases, assistants for complex domains, and multimodal assistants that retrieve both text and images.

Deploying RAG models

- Separate components – The retriever, ranker, and generator can be updated independently. A typical update might involve improving the vector index or the retriever model. Use canary or blue‑green rollouts for each component.

- Caching – For popular queries, cache the retrieval and generation results to minimize latency and compute cost.

- Provenance tracking – Store metadata about which documents were retrieved and which parts were used to generate the answer. This supports transparency and compliance.

- Multi‑tenant isolation – For SaaS platforms, maintain separate indices per tenant or apply strict access control to ensure queries only retrieve authorized content.

Expert insights

- Open‑source frameworks – Tools like LangChain and LlamaIndex speed up RAG development. They integrate with vector databases and large language models.

- Cost savings – RAG can reduce fine‑tuning costs by 60–80 percent by retrieving domain‑specific knowledge on demand rather than training new parameters.

- Clarifai tip – Clarifai can host your vector indexes and retrieval pipelines as part of its platform. Its API supports adding metadata for provenance and connecting to generative models. For multi‑tenant SaaS, Clarifai provides tenant isolation and resource quotas.

Agentic AI & multi‑agent systems: the next frontier

Agentic AI refers to systems where AI agents make decisions, plan tasks, and act autonomously in the real world. These agents might write code, schedule meetings, or negotiate with other agents. Their promise is enormous but so are the risks.

Designing for value, not hype

McKinsey analysts emphasize that success with agentic AI isn’t about the agent itself but about reimagining the workflow. Companies should map out the end‑to‑end process, identify where agents can add value, and ensure people remain central to decision‑making. The most common pitfalls include building flashy agents that do little to improve real work, and failing to provide learning loops that let agents adapt over time.

When to use agents (and when not to)

High‑variance, low‑standardization tasks benefit from agents: e.g., summarizing complex legal documents, coordinating multi‑step workflows, or orchestrating multiple tools. For simple rule‑based tasks (data entry), rule‑based automation or predictive models suffice. Use this guideline to avoid deploying agents where they add unnecessary complexity.

Security & governance

Agentic AI introduces new vulnerabilities. McKinsey notes that agentic systems present attack surfaces akin to digital insiders: they can make decisions without human oversight, potentially causing harm if compromised. Risks include chained vulnerabilities (errors cascade across multiple agents), synthetic identity attacks, and data leakage. Organizations must set up risk assessments, safelists for tools, identity management, and continuous monitoring.

Expert insights

- Layered governance – Assign roles: some agents perform tasks, while others supervise. Provide human-in-the-loop approvals for sensitive actions.

- Test harnesses – Use simulation environments to test agents before connecting to real systems. Mock external APIs and tools.

- Clarifai tip – Clarifai’s platform supports orchestration of multi‑agent workflows. You can build agents that call multiple Clarifai models or external APIs, while logging all actions. Access controls and audit logs help meet governance requirements.
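The safelist, human approval, and audit‑log ideas can be combined in one gateway that every tool call passes through. This is a hypothetical sketch, not a Clarifai interface: `ToolGateway`, the tool names, and the approver callback are invented, and the approver here simply declines to stand in for a human reviewer.

```python
from typing import Any, Callable, Dict, Iterable, List, Optional


class ToolGateway:
    """Mediates every tool call an agent makes and records the outcome."""

    def __init__(self, tools: Dict[str, Callable[..., Any]],
                 sensitive: Iterable[str],
                 approver: Callable[[str, str, dict], bool]):
        self.tools = tools               # safelist: only these tools can be invoked
        self.sensitive = set(sensitive)  # tools that need human sign-off
        self.approver = approver
        self.audit_log: List[dict] = []

    def call(self, agent: str, tool: str, **kwargs: Any) -> Optional[Any]:
        if tool not in self.tools:
            return self._record(agent, tool, kwargs, "denied: not on safelist")
        if tool in self.sensitive and not self.approver(agent, tool, kwargs):
            return self._record(agent, tool, kwargs, "denied: approval refused")
        result = self.tools[tool](**kwargs)
        self._record(agent, tool, kwargs, "allowed")
        return result

    def _record(self, agent: str, tool: str, kwargs: dict, outcome: str) -> None:
        self.audit_log.append(
            {"agent": agent, "tool": tool, "args": kwargs, "outcome": outcome}
        )
        return None


gateway = ToolGateway(
    tools={
        "query_logs": lambda service: f"logs for {service}",
        "deploy_fix": lambda patch: f"deployed {patch}",
    },
    sensitive={"deploy_fix"},
    approver=lambda agent, tool, args: False,  # stand-in for a reviewer who declines
)

logs = gateway.call("analysis_agent", "query_logs", service="checkout")
deploy = gateway.call("deployment_agent", "deploy_fix", patch="fix-123")
unknown = gateway.call("code_agent", "drop_database")
outcomes = [entry["outcome"] for entry in gateway.audit_log]
print(outcomes)
```

The same gateway doubles as a test harness: pointing `tools` at mocks lets you exercise an agent before it touches real systems.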

Creative example

Imagine a multi‑agent system that helps engineers troubleshoot software incidents. A monitoring agent detects anomalies and triggers an analysis agent to query logs. If the issue is code-related, a code assistant agent suggests fixes and a deployment agent rolls them out under human approval. Each agent has defined roles and must log actions. Governance policies limit the resources each agent can modify.

Serverless inference & on‑prem deployment: balancing convenience and control

Serverless inferencing

In traditional AI deployment, teams manage GPU clusters, container orchestration, load balancing, and auto‑scaling. This overhead can be substantial. Serverless inference offers a paradigm shift: the cloud provider handles resource provisioning, scaling, and management, so you pay only for what you use. A model can process a million predictions during a peak event and scale down to a handful of requests on a quiet day, with zero idle cost.

Features: Serverless inference includes automatic scaling from zero to thousands of concurrent executions, pay‑per‑request pricing, high availability, and near‑instant deployment. New services like serverless GPUs (announced by major cloud providers) allow GPU‑accelerated inference without infrastructure management.

Use cases: Rapid experiments, unpredictable workloads, prototypes, and cost‑sensitive applications. It also suits teams without dedicated DevOps expertise.

Limitations: Cold‑start latency can be high, long‑running models may not fit the pricing model, vendor lock‑in is a concern, and you may have limited control over environment customization.

On‑prem & hybrid deployments

According to industry forecasts, more companies are running custom AI models on‑premises, driven by the availability of open‑source models and by compliance requirements. On‑premises deployments give full control over data, hardware, and network security. They allow for air‑gapped systems when regulatory mandates require that data never leaves the premises.

Hybrid strategies combine both: run sensitive components on‑prem and scale out inference to the cloud when needed. For example, a bank might keep its risk models on‑prem but burst to cloud GPUs for large‑scale inference.

Expert insights

- Cost modeling – Understand total cost of ownership. On‑prem hardware requires capital investment but may be cheaper long term. Serverless eliminates capital expenditure but can be costlier at scale.

- Vendor flexibility – Build systems that can switch between on‑prem, cloud, and serverless backends. Clarifai’s compute orchestration supports running the same model across multiple deployment targets (cloud GPUs, on‑prem clusters, serverless endpoints).

- Security – On‑prem is not inherently more secure. Cloud providers invest heavily in security. Weigh compliance needs, network topology, and threat models.
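The cost trade‑off can be framed as a break‑even calculation. Every figure below is a made‑up placeholder, not a real price, and the model is deliberately crude: it ignores on‑prem capacity limits, utilization, and engineering time, so treat it only as a starting point for your own numbers.

```python
def on_prem_monthly_cost(hardware_cost, amortization_months, monthly_ops_cost):
    """Fixed monthly cost: amortized hardware plus power, hosting, and staff."""
    return hardware_cost / amortization_months + monthly_ops_cost


def serverless_monthly_cost(requests_per_month, price_per_1k_requests):
    """Variable monthly cost: pay only for requests actually served."""
    return requests_per_month / 1000 * price_per_1k_requests


def break_even_requests(hardware_cost, amortization_months,
                        monthly_ops_cost, price_per_1k_requests):
    """Monthly request volume above which on-prem becomes cheaper than serverless."""
    fixed = on_prem_monthly_cost(hardware_cost, amortization_months, monthly_ops_cost)
    return fixed / price_per_1k_requests * 1000


# Placeholder inputs: 108,000 of hardware over 36 months, 2,000 per month to operate,
# and 0.50 per thousand serverless requests.
fixed = on_prem_monthly_cost(108_000, 36, 2_000)
threshold = break_even_requests(108_000, 36, 2_000, 0.50)

for volume in (2_000_000, 20_000_000):
    variable = serverless_monthly_cost(volume, 0.50)
    cheaper = "serverless" if variable < fixed else "on-prem"
    print(volume, cheaper)
```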

Creative example

A retail analytics company processes millions of in-store camera feeds to detect stockouts and shopper behavior. They run a baseline model on serverless GPUs to handle spikes during peak shopping hours. For stores with strict privacy requirements, they deploy local runners that keep footage on site. Clarifai’s platform orchestrates the models across these environments and manages update rollouts.

Comparing deployment strategies & choosing the right one

There are many strategies to choose from. Here is a simplified framework:

Step 1: Define your use case & risk level

Ask: Is the model user-facing? Does it operate in a regulated domain? How costly is an error? High-risk use cases (medical diagnosis) need conservative rollouts. Low-risk models (content recommendation) can use more aggressive strategies.

Step 2: Choose candidate strategies

- Shadow testing for unknown models or those with large distribution shifts.

- Canary releases for low-latency applications where incremental rollout is possible.

- Blue-green for mission-critical systems requiring zero downtime.

- Rolling updates and champion-challenger for continuous improvement in drift-heavy domains.

- Multi-armed bandits for rapid experimentation and personalization.

- Federated & edge for privacy, offline capability, and data locality.

- Serverless for unpredictable or cost-sensitive workloads.

- Agentic AI orchestration for complex multi-step workflows.

Step 3: Plan and automate testing

Develop a testing plan: gather baseline metrics, define success criteria, and choose monitoring tools. Use CI/CD pipelines and model registries to track versions, metrics, and rollbacks. Automate logging, alerts, and fallbacks.

Step 4: Monitor & iterate

After deployment, monitor metrics continuously. Observe for drift, bias, or performance degradation. Set up triggers to retrain or roll back. Evaluate business impact and adjust strategies as necessary.

Expert insights

- SRE mindset – Adopt the SRE principle of embracing risk while controlling blast radius. Rollbacks are normal and should be rehearsed.

- Business metrics matter – Ultimately, success is measured by the impact on users and revenue. Align model metrics with business KPIs.

- Clarifai tip – Clarifai’s platform integrates model registry, orchestration, deployment, and monitoring. It helps implement these best practices across on-prem, cloud, and serverless environments.

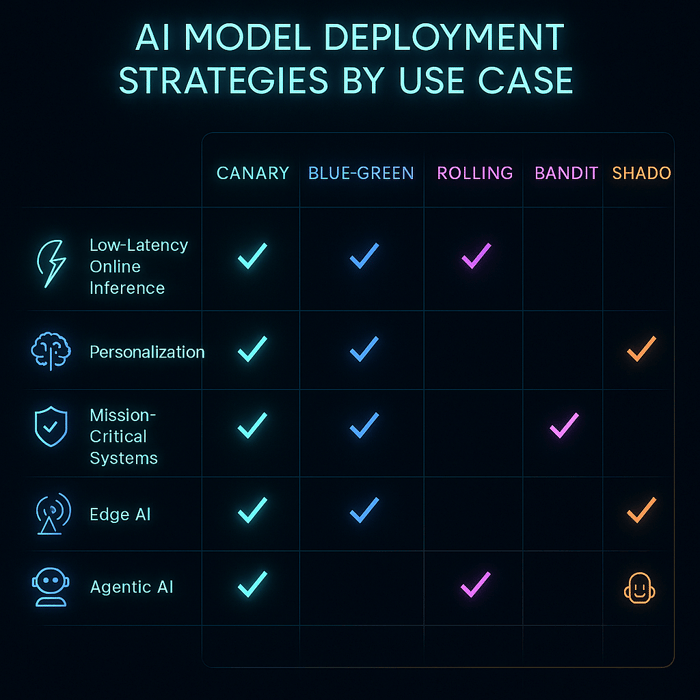

AI Model Deployment Strategies by Use Case

| Use Case | Recommended Deployment Strategies | Why These Work Best |
| --- | --- | --- |
| 1. Low-Latency Online Inference (e.g., recommender systems, chatbots) | Canary Deployment; Shadow/Mirrored Traffic; Cell-Based Rollout | Gradual rollout under live traffic; ensures no latency regressions; isolates failures to specific user groups. |
| 2. Continuous Experimentation & Personalization (e.g., A/B testing, dynamic UIs) | Multi-Armed Bandit (MAB); Contextual Bandit | Dynamically allocates traffic to better-performing models; reduces experimentation time and improves online reward. |
| 3. Mission-Critical / Zero-Downtime Systems (e.g., banking, payments) | Blue-Green Deployment | Enables instant rollback; maintains two environments (active + standby) for high availability and safety. |
| 4. Regulated or High-Risk Domains (e.g., healthcare, finance, legal AI) | Extended Shadow Launch; Progressive Canary | Allows full validation before exposure; maintains compliance audit trails; supports phased verification. |
| 5. Drift-Prone Environments (e.g., fraud detection, ad click prediction) | Rolling Deployment; Champion-Challenger Setup | Smooth, periodic updates; challenger model can gradually replace the champion when it consistently outperforms. |
| 6. Batch Scoring / Offline Predictions (e.g., ETL pipelines, catalog enrichment) | Recreate Strategy; Blue-Green for Data Pipelines | Simple deterministic updates; rollback by dataset versioning; low complexity. |
| 7. Edge / On-Device AI (e.g., IoT, autonomous drones, industrial sensors) | Phased Rollouts per Device Cohort; Feature Flags / Kill-Switch | Minimizes risk on hardware variations; allows quick disablement in case of model failure. |
| 8. Multi-Tenant SaaS AI (e.g., enterprise ML platforms) | Cell-Based Rollout per Tenant Tier; Blue-Green per Cell | Ensures tenant isolation; supports gradual rollout across different customer segments. |
| 9. Complex Model Graphs / RAG Pipelines (e.g., retrieval-augmented LLMs) | Shadow Entire Graph; Canary at Router Level; Bandit Routing | Validates interactions between retrieval, generation, and ranking modules; optimizes multi-model performance. |
| 10. Agentic AI Applications (e.g., autonomous AI agents, workflow orchestrators) | Shadowed Tool-Calls; Sandboxed Orchestration; Human-in-the-Loop Canary | Ensures safe rollout of autonomous actions; supports controlled exposure and traceable decision memory. |
| 11. Federated or Privacy-Preserving AI (e.g., healthcare data collaboration) | Federated Deployment with On-Device Updates; Secure Aggregation Pipelines | Enables training and inference without centralizing data; complies with data protection standards. |

|

12. Serverless or Event-Driven Inference (e.g., LLM endpoints, real-time triggers)

|

• Serverless Inference (GPU-based)

• Autoscaling Containers (Knative / Cloud Run)

|

Pay-per-use efficiency; auto-scaling based on demand; great for bursty inference workloads.

|

Expert insights

- Hybrid rollouts often combine shadow + canary, ensuring quality under production traffic before full release.

- Observability pipelines (metrics, logs, drift monitors) are as critical as the deployment method.

- For agentic AI, use audit-ready memory stores and tool-call simulation before production enablement.

- Clarifai Compute Orchestration simplifies canary and blue-green deployments by automating GPU routing and rollback logic across environments.

- Clarifai Local Runners enable on-prem or edge deployment without uploading sensitive data.
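The bandit routing recommended in the table for experimentation and multi-model pipelines can be sketched with Thompson sampling, one common multi-armed bandit policy. The example below is a simulation under stated assumptions: rewards are binary (for example, click or no click), the `ThompsonRouter` class and model names are hypothetical, and the "true" success rates exist only to drive the simulation.

```python
import random


class ThompsonRouter:
    """Routes each request to the model with the best sampled success rate."""

    def __init__(self, model_names, seed=None):
        self.rng = random.Random(seed)
        # Beta(1, 1) prior per model, stored as [alpha, beta].
        self.stats = {name: [1, 1] for name in model_names}

    def choose(self):
        # Sample a plausible success rate for each model; pick the highest.
        samples = {
            name: self.rng.betavariate(alpha, beta)
            for name, (alpha, beta) in self.stats.items()
        }
        return max(samples, key=samples.get)

    def update(self, name, reward):
        # A success increments alpha; a failure increments beta.
        if reward:
            self.stats[name][0] += 1
        else:
            self.stats[name][1] += 1


def simulate(true_rates, rounds=5000, seed=0):
    """Simulate traffic allocation given assumed per-model success rates."""
    router = ThompsonRouter(true_rates, seed=seed)
    env = random.Random(seed + 1)  # separate RNG for simulated user feedback
    counts = {name: 0 for name in true_rates}
    for _ in range(rounds):
        name = router.choose()
        counts[name] += 1
        router.update(name, env.random() < true_rates[name])
    return counts


if __name__ == "__main__":
    print(simulate({"model_a": 0.05, "model_b": 0.15}))
```

Over time the router sends most traffic to the stronger model while still occasionally sampling the weaker one. That is the trade-off between exploration and exploitation that distinguishes bandits from a fixed-split A/B test.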

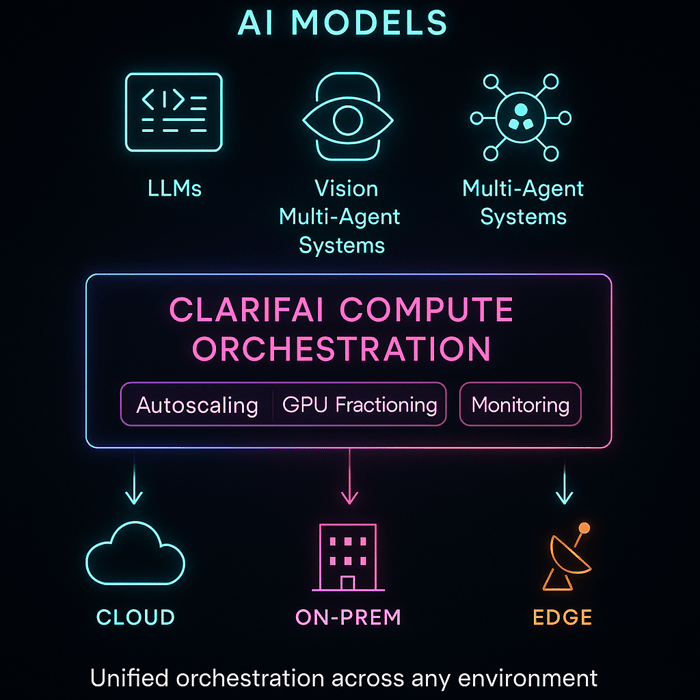

How Clarifai Enables Robust Deployment at Scale

Modern AI deployment isn’t just about putting models into production — it’s about doing it efficiently, reliably, and across any environment. Clarifai’s platform helps teams operationalize the strategies discussed earlier — from canary rollouts to hybrid edge deployments — through a unified, vendor-agnostic infrastructure.

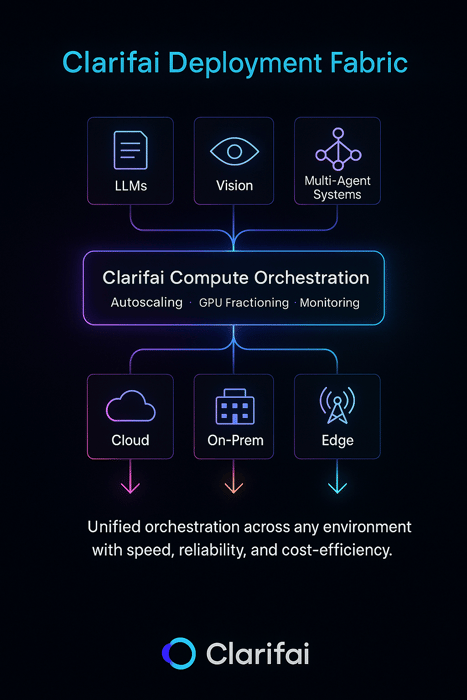

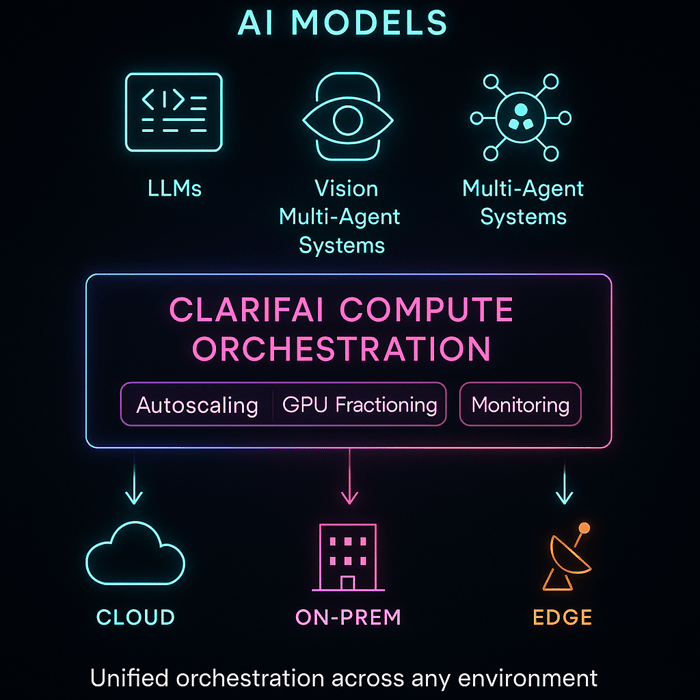

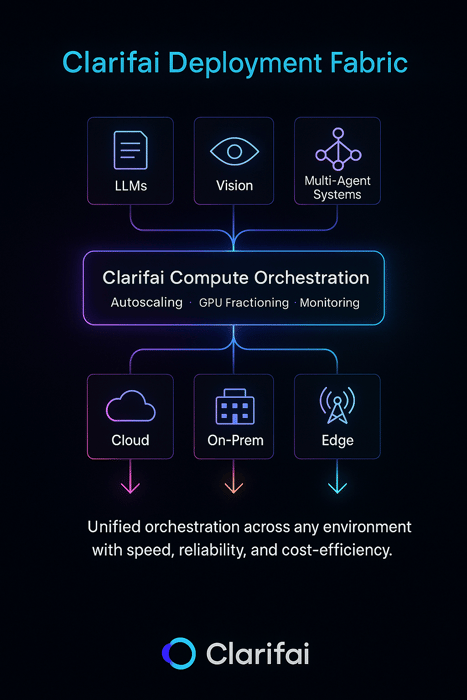

Clarifai Compute Orchestration

Clarifai’s Compute Orchestration serves as a control plane for model workloads, intelligently managing GPU resources, scaling inference endpoints, and routing traffic across cloud, on-prem, and edge environments.

It’s designed to help teams deploy and iterate faster while maintaining cost transparency and performance guarantees.

Key advantages:

- Performance & Cost Efficiency: Delivers 544 tokens/sec throughput, 3.6 s time-to-first-answer, and a blended cost of $0.16 per million tokens — among the fastest GPU inference rates for its price.

- Autoscaling & Fractional GPUs: Dynamically allocates compute capacity and shares GPUs across smaller jobs to minimize idle time.

- Reliability: Ensures 99.999% uptime with automatic redundancy and workload rerouting — critical for mission-sensitive deployments.

- Deployment Flexibility: Supports all major rollout patterns (canary, blue-green, shadow, rolling) across heterogeneous infrastructure.

- Unified Observability: Built-in dashboards for latency, throughput, and utilization help teams fine-tune deployments in real time.

“Our customers can now scale their AI workloads seamlessly — on any infrastructure — while optimizing for cost, reliability, and speed.”

— Matt Zeiler, Founder & CEO, Clarifai

AI Runners and Hybrid Deployment

For workloads that demand privacy or ultra-low latency, Clarifai AI Runners extend orchestration to local and edge environments, letting models run directly on internal servers or devices while staying connected to the same orchestration layer.

This enables secure, compliant deployments for enterprises handling sensitive or geographically distributed data.

Together, Compute Orchestration and AI Runners give teams a single deployment fabric — from prototype to production, cloud to edge — making Clarifai not just an inference engine but a deployment strategy enabler.

Frequently Asked Questions (FAQs)

- What is the difference between canary and blue-green deployments?

Canary deployments gradually roll out the new version to a subset of users, monitoring performance and rolling back if needed. Blue-green deployments create two parallel environments; you cut over all traffic at once and can revert instantly by switching back.
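The difference is easiest to see in the routing logic. The following is a minimal sketch, not a production router: `canary_route` and `BlueGreenSwitch` are hypothetical names, and real systems usually implement this at the load balancer or service mesh layer.

```python
import hashlib


def canary_route(user_id, canary_percent):
    """Deterministically send about canary_percent of users to the canary."""
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") % 10_000  # stable bucket 0..9999
    return "canary" if bucket < canary_percent * 100 else "stable"


class BlueGreenSwitch:
    """All traffic goes to one environment; cut-over flips it in one step."""

    def __init__(self, live="blue"):
        self.live = live

    @property
    def standby(self):
        return "green" if self.live == "blue" else "blue"

    def cut_over(self):
        # Promote standby to live; calling this again reverts the change.
        self.live = self.standby
        return self.live

    def route(self, user_id):
        return self.live


if __name__ == "__main__":
    print(canary_route("user-42", canary_percent=5))
    switch = BlueGreenSwitch()
    print(switch.cut_over())  # release: all traffic moves to the other environment
    print(switch.cut_over())  # rollback: all traffic moves back
```

Hashing the user ID keeps each user on the same version as the canary percentage grows, so a user who saw the new model at 5 percent still sees it at 50 percent. The blue-green switch has no notion of a percentage at all: exposure is all or nothing, which is what makes the rollback instant.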

- When should I consider federated learning?

Use federated learning when data is distributed across devices or institutions and cannot be centralized due to privacy or regulation. Federated learning enables collaborative training while keeping data localized.

- How do I monitor model drift?

Monitor input feature distributions, prediction distributions, and downstream business metrics over time. Set up alerts that fire when a distribution shifts beyond a threshold measured against a baseline window. Tools like Clarifai’s model monitoring or open-source solutions can help.
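One widely used way to quantify a shift between a baseline distribution and live data is the Population Stability Index (PSI). The sketch below is a simplified, standard-library-only version with equal-width bins. The `psi` function name and the binning choices are assumptions for illustration, and the cutoffs often quoted for PSI (around 0.1 for moderate shift and 0.25 for significant shift) are rules of thumb rather than fixed standards.

```python
import math


def psi(expected, actual, bins=10, eps=1e-6):
    """Population Stability Index between a baseline and a live sample."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if the baseline is constant

    def fractions(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            idx = min(max(idx, 0), bins - 1)  # clip out-of-range values to edge bins
            counts[idx] += 1
        # eps avoids log(0) and division by zero for empty bins.
        return [max(c / len(values), eps) for c in counts]

    e, a = fractions(expected), fractions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


if __name__ == "__main__":
    baseline = [i / 100 for i in range(1000)]  # feature values at training time
    live = [v + 3 for v in baseline]           # same feature, shifted in production
    print(f"PSI vs. itself:  {psi(baseline, baseline):.3f}")
    print(f"PSI vs. shifted: {psi(baseline, live):.3f}")
```

In practice a check like this would run per feature and per prediction score on a schedule, with alerts raised when the value stays above the chosen cutoff.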

- What are the risks of agentic AI?

Agentic AI introduces new vulnerabilities such as synthetic identity attacks, chained errors across agents, and untraceable data leakage. Organizations must implement layered governance, identity management, and simulation testing before connecting agents to real systems.

- Why does serverless inference matter?

Serverless inference removes most of the operational burden of managing infrastructure. It scales automatically, including down to zero when idle, and is typically billed per request or per unit of compute time. However, it can add latency due to cold starts and can lead to vendor lock-in.

- How does Clarifai help with deployment strategies?

Clarifai provides a full-stack AI platform. You can train, deploy, and monitor models across cloud GPUs, on-prem clusters, local devices, and serverless endpoints. Features like compute orchestration, model registry, role-based access control, and auditable logs support safe and compliant deployments.

Conclusion

Model deployment strategies are not one-size-fits-all. By matching deployment techniques to specific use cases and balancing risk, speed, and cost, organizations can deliver AI reliably and responsibly. From shadow testing to agentic orchestration, each strategy requires careful planning, monitoring, and governance. Emerging trends like serverless inference, federated learning, RAG, and agentic AI open new possibilities but also demand new safeguards. With the right frameworks and tools—and with platforms like Clarifai offering compute orchestration and scalable inference across hybrid environments—enterprises can turn AI prototypes into production systems that truly make a difference.